Predictability is often one of the goals organisations seek with their agile teams but, in the complex domain, how do you move past say-do as a measurement for predictability? This post details how our teams at ASOS Tech can objectively look at this whilst accounting for variation and complexity in their work…

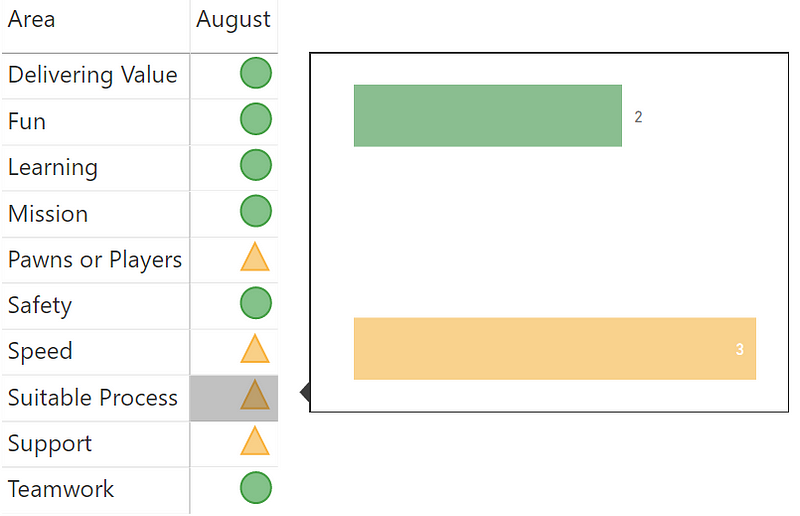

Predictability is often the panacea that many delivery teams and organisations seek. To be clear, I believe predictability to be one of a balanced set of themes (along with Value, Flow, Delivery and Culture) that teams and organisations should care about when it comes to agility.

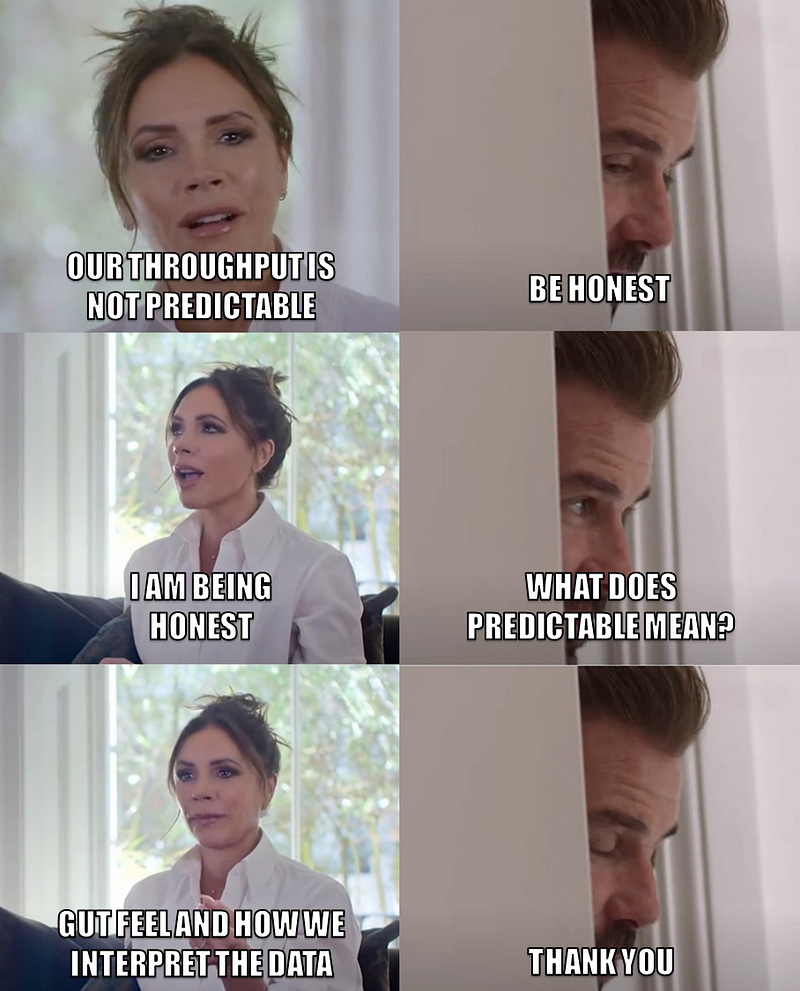

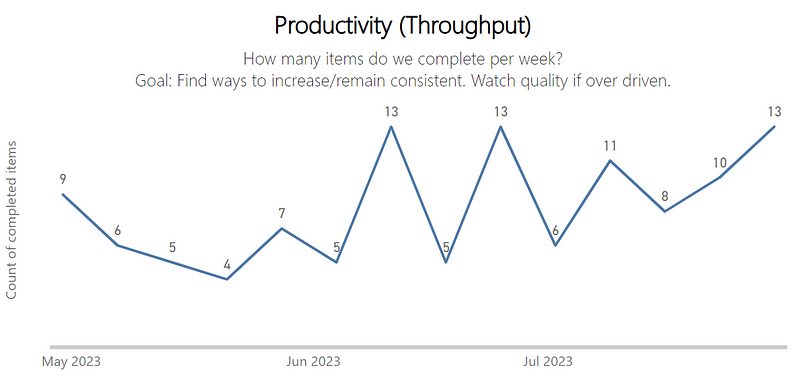

Recently, I was having a conversation around this topic with one of our Lead Software Engineers about his team. He explained how the team he leads had big variation in their weekly Throughput and therefore were not predictable, with the chart looking like so:

At first glance, my view was the same. The big drops and spikes suggested too much variation for this to be useful from a forecasting perspective (in spite of the positive sign of an upward trend!) and that this team was not predictable.

The challenge, as practitioners, is how do we validate this perspective?

Is there a way that we can objectively measure predictability?

What predictability is not

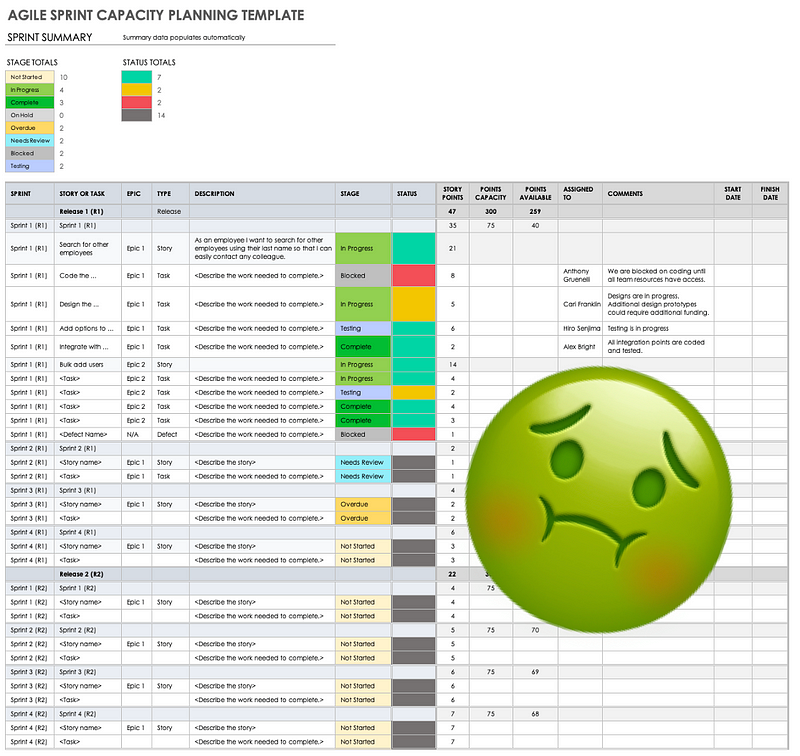

Some of you may be reading and saying that planned vs. actual is how we can/should measure predictability. Often referred to as “say-do ratio”, this once was a fixture in the agile world with the notion of “committed” items/story points for a sprint. Sadly, many still believe this is a measure to look at, when in fact the idea of a committed number of items/points left the Scrum Guide more than 10 years ago. Measuring this has multiple negative impacts on a team, which this fantastic blog from Ez Balci explains.

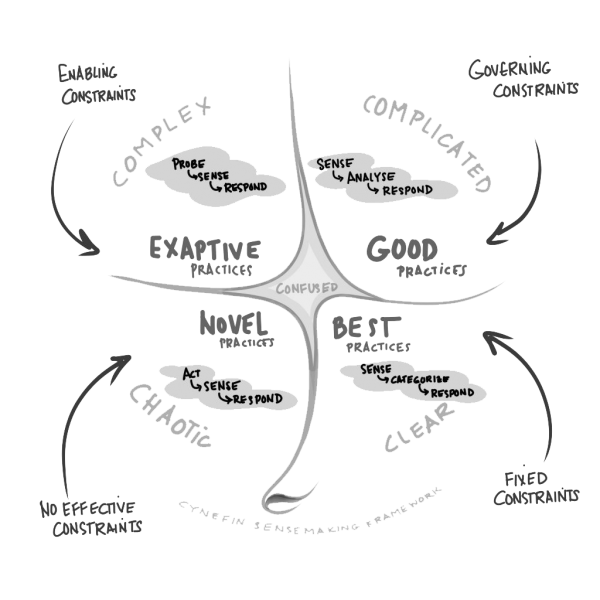

Planned Vs. Actual / Committed Vs. Delivered / Say-Do are all measurement relics of the past that we need to move on from. These are appropriate when the work is clear. For example, when I go to the supermarket and my wife gives me a list of things we need, did I get what we needed? Did I do what I said I was going to do? Software development, by contrast, is complex: we are creating something (features, functionality, etc.) from nothing through writing lines of code.

About — Cynefin Framework — The Cynefin Co

Thinking about predictability as something ‘black and white’, as those approaches encourage, simply does not work in a complex domain. We therefore need a better means of looking at predictability, one that accounts for this complexity.

What we can use instead

Karl Scotland explored similar ideas around predictability in a blog post, specifically looking at the difference between percentiles of cycle time data, for example whether there is a significant difference between your 50th percentile and your 85th percentile. This is something that, as a Coach, I also look at, but more to understand variation than to judge predictability. After exploring the ideas from the blog further, Karl himself shared in a talk that this was not a useful measure of predictability.

Which brings us on to how we can do it, using a Process Behaviour Chart (PBC). A PBC is a type of graph that visualises the variation in a process over time. It consists of a running record of data points, a central line that represents the average value, and upper and lower limits (referred to as Upper Natural Process Limit — UNPL and Lower Natural Process Limit — LNPL) that define the boundaries of routine variation. A PBC can help to distinguish between common causes and exceptional causes of variation, and to assess the predictability and stability of a process.
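To make the definition above concrete, here is a minimal sketch of how the central line and natural process limits can be calculated. It assumes the standard XmR (individuals and moving range) calculation, where the limits sit 2.66 times the average moving range either side of the mean. The function name and the sample data are made up for illustration and are not taken from the charts in this post.

```python
from statistics import mean


def natural_process_limits(values):
    """Return (lnpl, centre, unpl) for an XmR-style Process Behaviour Chart."""
    if len(values) < 2:
        raise ValueError("need at least two data points to compute a moving range")
    centre = mean(values)
    # Moving range: absolute difference between each pair of consecutive points.
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    average_moving_range = mean(moving_ranges)
    # 2.66 is the standard XmR scaling constant (3 / 1.128).
    spread = 2.66 * average_moving_range
    return centre - spread, centre, centre + spread


# Made-up sample data, purely illustrative.
sample = [5, 7, 6, 8, 4]
lnpl, centre, unpl = natural_process_limits(sample)
print(f"LNPL={lnpl:.3f}  centre={centre:.3f}  UNPL={unpl:.3f}")
```

Any point that falls outside the two limits would be treated as a signal of exceptional variation. Points inside them are routine variation.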

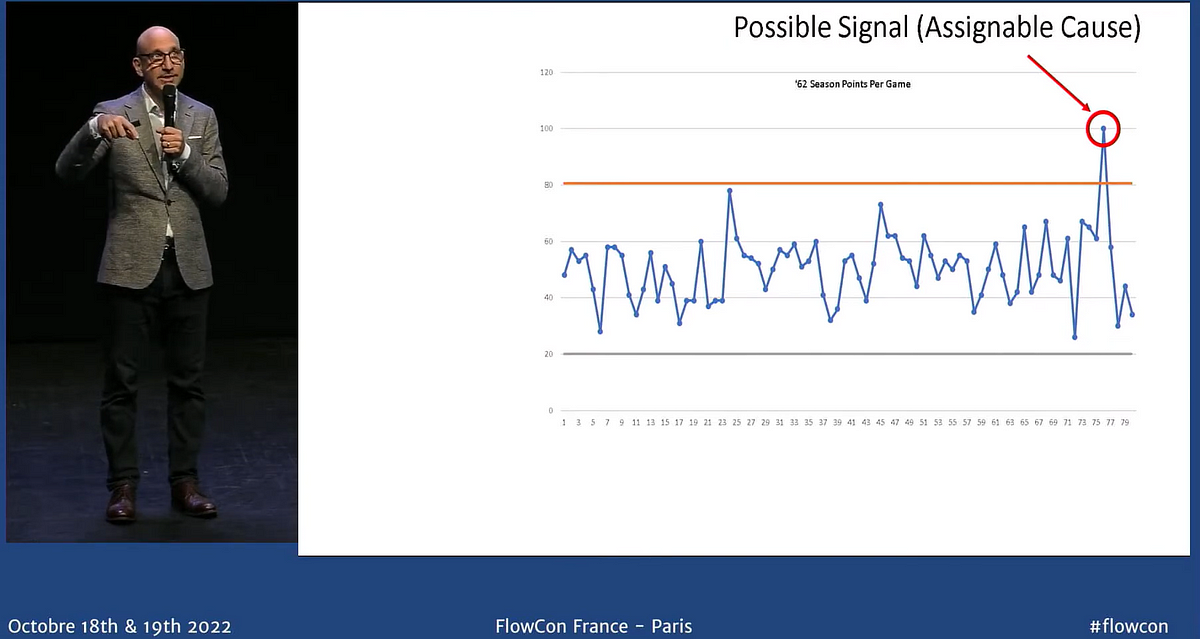

I first gained exposure to this chart through watching the “Lies, damned lies, and teens who smoke” talk from Dan Vacanti, as well as learning more through one of my regular chats with a fellow coach, Matt Milton. Whilst I will try my best not to spoil the talk, Dan looks at Wilt Chamberlain’s points scoring over the 1962 season in a PBC and, in particular, whether the 100-point game should be attributed to what some say it was.

Dan Vacanti — Lies, Damned Lies, and Teens Who Smoke

In his new book, Actionable Agile Metrics Volume II: Advanced Topics in Predictability, Dan goes to great lengths in explaining the underlying concepts behind variation and how to calculate/visualise PBCs for all four flow metrics of Throughput, Cycle Time, Work In Progress (WIP) and Work Item Age.

With software development being complex, we have to accept that variation is inevitable. It is about understanding how much variation is too much. PBCs can highlight to us when a team's process is predictable (within our UNPL and LNPL lines) or unpredictable (outside our UNPL and LNPL lines). They therefore can (and should) be used as an objective measurement of predictability.

Applying to our data

If we take our Throughput data shown at the beginning and put it into a PBC, we can now get a sense of whether this team is predictable or not:

We can see that in fact, this team is predictable. Despite us seemingly having lots of up and down values in our Throughput, all those values are within our expected range. It is worth noting that Throughput is the type of data that is zero bound as it is impossible for us to have a negative Throughput. So, by default, our LNPL is considered to be 0.
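As a rough sketch of the check described above, the snippet below calculates limits for weekly Throughput, floors the LNPL at 0 because Throughput is zero bound, and lists any weeks outside the limits. The 14 weekly values are invented to look "spiky" and are not the team's real data. The limit calculation assumes the standard XmR constant of 2.66, and only the simplest detection rule (a point outside the limits) is covered.

```python
from statistics import mean


def throughput_limits(weekly_throughput):
    """Return (lnpl, unpl) for weekly Throughput, with the LNPL floored at 0."""
    centre = mean(weekly_throughput)
    moving_ranges = [abs(b - a) for a, b in zip(weekly_throughput, weekly_throughput[1:])]
    spread = 2.66 * mean(moving_ranges)
    # Throughput cannot be negative, so a negative lower limit is treated as 0.
    return max(0.0, centre - spread), centre + spread


def weeks_outside_limits(weekly_throughput):
    """Return (week_number, value) pairs that fall outside the natural process limits."""
    lnpl, unpl = throughput_limits(weekly_throughput)
    return [
        (week, value)
        for week, value in enumerate(weekly_throughput, start=1)
        if value < lnpl or value > unpl
    ]


# Hypothetical 14 weeks of Throughput with plenty of ups and downs.
weekly_throughput = [4, 9, 3, 8, 5, 11, 6, 10, 4, 9, 7, 12, 5, 10]
lnpl, unpl = throughput_limits(weekly_throughput)
signals = weeks_outside_limits(weekly_throughput)
print(f"LNPL={lnpl:.2f}  UNPL={unpl:.2f}  signals={signals}")
```

With this invented data every week sits inside the limits, so the list of signals is empty even though the values swing between 3 and 12.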

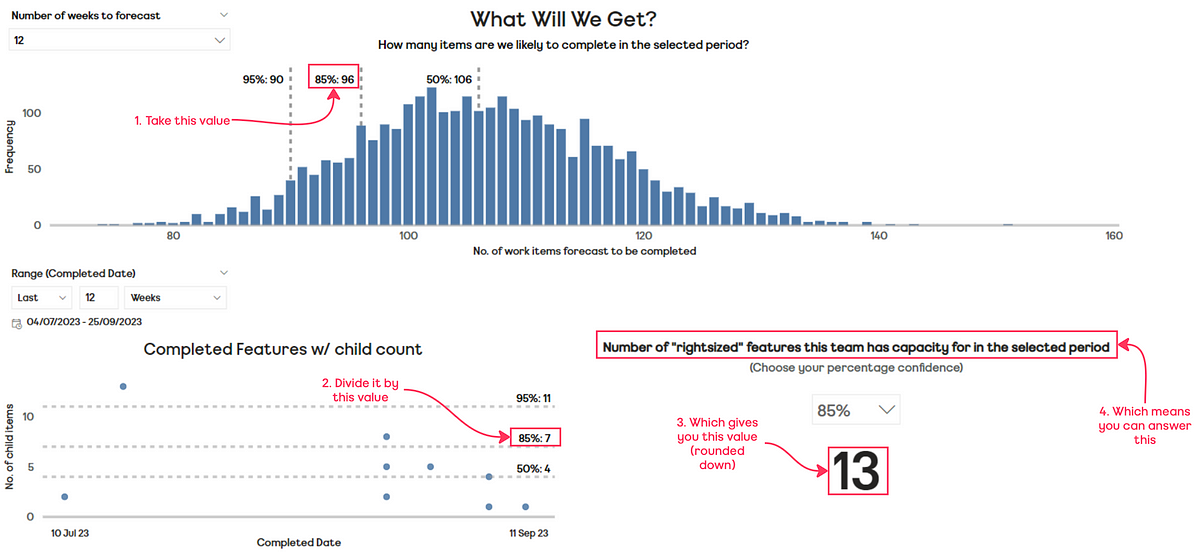

Another benefit of these values being predictable is that it also means that we can confidently use this data as input for forecasting delivery of multiple items using Monte Carlo simulation.
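For readers unfamiliar with the technique, a minimal Monte Carlo sketch might look like the following. It repeatedly samples from past weekly Throughput to simulate how many weeks a batch of items could take, then reads off percentiles. The function names, the 50-item backlog and the weekly values are all hypothetical, and real forecasting tools may sample differently.

```python
import math
import random


def simulate_weeks_to_finish(weekly_throughput, items_remaining, runs=10_000, seed=42):
    """Return a sorted list of simulated 'weeks needed' outcomes, one per run."""
    if not weekly_throughput or max(weekly_throughput) <= 0:
        raise ValueError("need at least one week with a throughput above zero")
    rng = random.Random(seed)  # seeded so the sketch is repeatable
    outcomes = []
    for _ in range(runs):
        done, weeks = 0, 0
        while done < items_remaining:
            # Each simulated week reuses a randomly chosen week from history.
            done += rng.choice(weekly_throughput)
            weeks += 1
        outcomes.append(weeks)
    return sorted(outcomes)


def weeks_at_confidence(sorted_outcomes, pct):
    """Nearest-rank percentile: weeks within which pct% of the runs finished."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_outcomes)))
    return sorted_outcomes[rank - 1]


# Hypothetical history and backlog size.
history = [4, 9, 3, 8, 5, 11, 6, 10, 4, 9, 7, 12, 5, 10]
outcomes = simulate_weeks_to_finish(history, items_remaining=50)
for pct in (50, 85, 95):
    print(f"{pct}% of runs finished within {weeks_at_confidence(outcomes, pct)} weeks")
```

Sampling from history like this only makes sense if that history reflects a stable process, which is why checking it with a PBC first is useful.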

What about the other flow metrics?

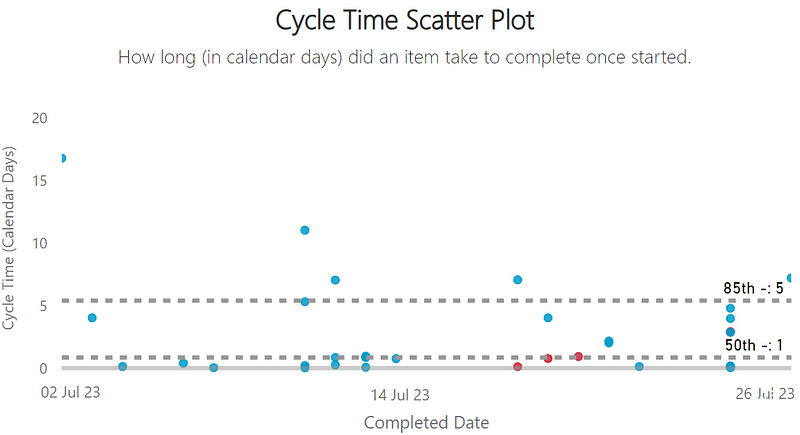

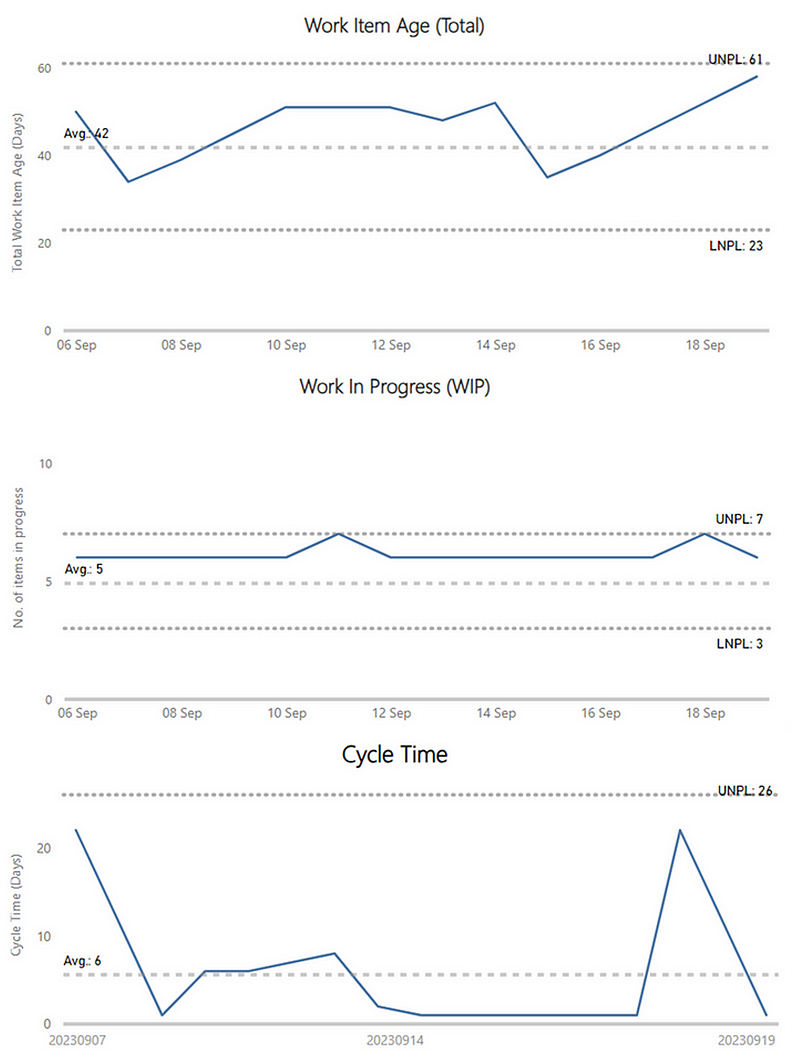

We can also look at the same chart for our Cycle Time, Work In Progress (WIP) and Work Item Age flow metrics. Generally, 10–20 data points is the sweet spot for the baseline data in a PBC (read the book to understand why), so we can’t quite use the same time duration as our Throughput chart (as this is aggregated weekly for the last 14 weeks).

If we were to look at the most recent completed items in that same range and their Cycle Time, putting it in a PBC gives us some indication as to what we should be focusing on:

The highlighted item would be the one to look at if you were wanting to use cycle time as an improvement point for a team. Something happened with this particular item that made it significantly different than all the others in that period. This is important as, quite often, a team might look at anything above their 85th percentile, which for the same dataset looks like so:

That’s potentially four additional data points that a team might spend time looking at, which were in fact just routine variation in their process. This is where the PBC helps us to separate signal from noise.
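The difference between the two approaches can be sketched in a few lines. The snippet below flags Cycle Times above the 85th percentile (using a simple nearest-rank percentile) and, separately, those above the UNPL (assuming the standard XmR constant of 2.66). The 20 Cycle Times are invented for illustration, so the counts will not match the charts in this post.

```python
import math
from statistics import mean


def percentile(values, pct):
    """Nearest-rank percentile of a list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def upper_natural_process_limit(values):
    """UNPL for an XmR chart: mean plus 2.66 times the average moving range."""
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    return mean(values) + 2.66 * mean(moving_ranges)


# Hypothetical Cycle Times (in days) for 20 completed items, in completion order.
cycle_times = [3, 5, 2, 6, 4, 7, 3, 5, 8, 4, 6, 2, 5, 30, 4, 7, 3, 6, 9, 5]

p85 = percentile(cycle_times, 85)
unpl = upper_natural_process_limit(cycle_times)

above_p85 = [ct for ct in cycle_times if ct > p85]
above_unpl = [ct for ct in cycle_times if ct > unpl]

print(f"85th percentile = {p85} days, items above it: {above_p85}")
print(f"UNPL = {unpl:.2f} days, items above it: {above_unpl}")
```

In this made-up dataset three items sit above the 85th percentile, but only the 30-day item is beyond the UNPL, so only that one would be worth investigating as a signal.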

With a PBC for Work In Progress (WIP), we can get a better understanding around where our WIP has increased to the point of making us unpredictable:

We would often look to see if we are within our WIP limits when, in fact, there is also the possibility (as shown in this chart) of having WIP that is too low as well as too high. There may be good reasons for this, for example keeping WIP low as we approach Black Friday (or, as we refer to it internally, Peak) so there is capacity if teams need to work on urgent items.

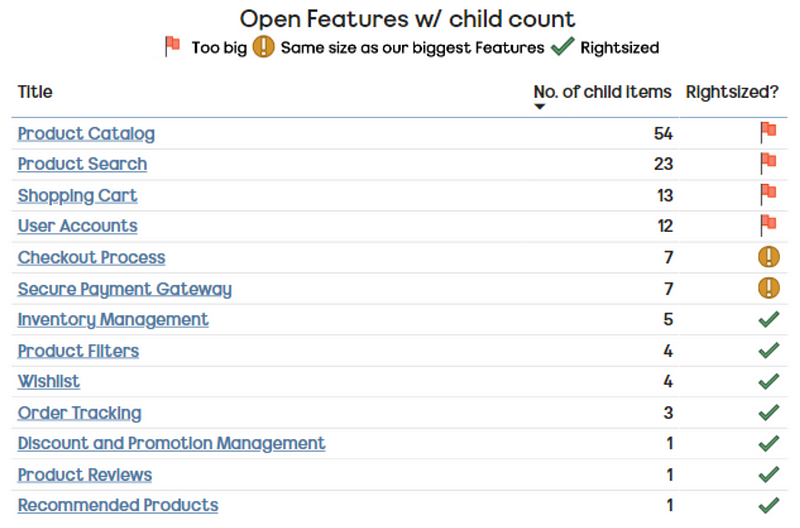

Work Item Age is where it gets the most interesting. As explained in the book, looking at this in a PBC is tricky. Considering we look at individual items and their status, how can we possibly put this in a chart that allows us to look at predictability? This is where tracking Total Work Item Age (which Dan credits to Prateek Singh) helps us:

Total Work Item Age is simply the sum of the Ages of all items that are in progress for a given time period (most likely per day). For example, let’s say you have 4 items currently in progress. The first item’s Age is 12 days. The second item’s Age is 2 days. The third’s is 6 days, and the fourth’s is 1 day. The Total Age for your process would be 12 + 2 + 6 + 1 = 21 days…using the Total Age metric a team could see how its investment is changing over time and analyse if that investment is getting out of control or not.
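The arithmetic in that example can be reproduced with a short sketch. It assumes an item's Age is the number of calendar days between its start date and the day being measured (some teams count the start day as day 1, which would add one day per item). The dates and function names are hypothetical and were chosen so the Ages match the 12, 2, 6 and 1 from the example.

```python
from datetime import date


def work_item_age(started_on, today):
    """Age in days of a single in-progress item (assumes start day counts as day 0)."""
    return (today - started_on).days


def total_work_item_age(start_dates, today):
    """Sum of the Ages of all in-progress items on a given day."""
    return sum(work_item_age(started_on, today) for started_on in start_dates)


# Hypothetical start dates for four in-progress items.
today = date(2024, 1, 13)
in_progress = [
    date(2024, 1, 1),   # 12 days old
    date(2024, 1, 11),  # 2 days old
    date(2024, 1, 7),   # 6 days old
    date(2024, 1, 12),  # 1 day old
]

ages = [work_item_age(started_on, today) for started_on in in_progress]
total = total_work_item_age(in_progress, today)
print(ages, total)  # [12, 2, 6, 1] 21
```

Recording this total once per day gives a single series of values that could then be plotted in a PBC like any other metric.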

Plotting this gives new insight, as a team may well be keeping their WIP within limits, yet the age of those items is a cause for concern:

Interestingly, when discussing this in the ProKanban Slack, Prateek made the claim that he believes Total Work Item Age is the new “one metric to rule them all”, stating that if you keep this within limits, the other flow metrics will follow…and I think he might be onto something:

Summary

So, what does this all mean?

Well, for our team mentioned at the very beginning, the Lead Software Engineer can be pleased. Whilst it might not look it at first glance, we can objectively say that from a Throughput perspective their team is in fact predictable. When looking at the other flow metrics for this team, we can see that we still have some work to be done to understand what is causing the variation in our process.

As Coaches, we (and our teams) have another tool in the toolbox that allows us to quickly, and objectively, validate how ‘predictable’ a team is. Moving to something like this allows for an objective lens on predictability, rather than relying on the differing opinions of people who are interpreting data in different ways. To be clear, predictability is of course not the only thing that matters, but it is one of many. If you’d like to try the same for your teams, check out the template in this GitHub repo (shout out to Benjamin Huser-Berta for collaborating on this as well; it works for both Jira and Azure DevOps).