Psychological Safety

A trip to Manchester this week involved interviewing for a new Product Manager role, making a point of focusing on questions centered around outcomes people had experienced (both good and bad). I was really impressed in particular during one of the interviews where a candidate mentioned around the importance of psychological safety for the team. This individual even went on to reference Amy Edmondson when I queried further, which was even more impressive! It’s clear now that more and more practitioners are aware of the importance of psychological safety in their environment with it often being the focus of talks at conferences, meetups etc.

For those unfamiliar, psychological safety refers to:

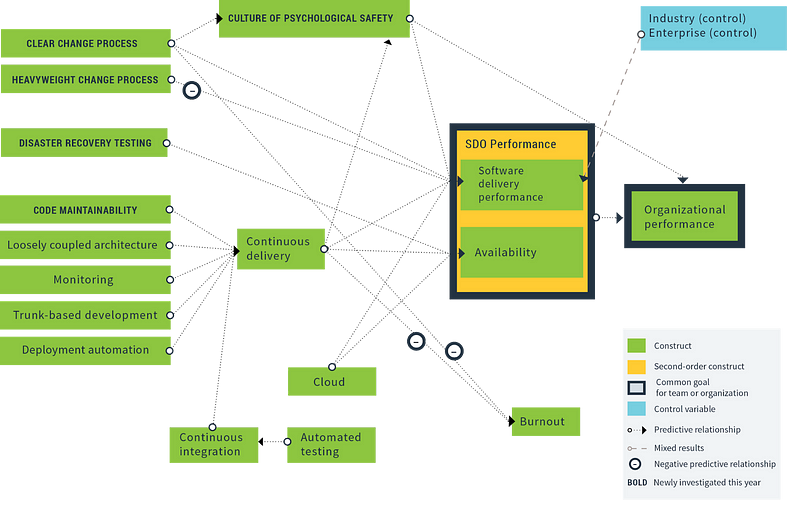

As part of Project Aristotle, Google found it to be the top key dynamic that set successful teams apart from other teams at Google. It also is referenced in this years State of DevOps report as positively impact software delivery performance.

The 2019 Accelerate State of DevOps

In our ways of working (WoW) programme, tracking this will be really important. Measuring it is no doubt hard, however there are some useful articles already that may help us in this aspect of our journey.

Dev+Ops != DevOps

This week we’ve taken a look at some of pilot teams in our WoW programme and planning the next steps in bringing them closer together in the value stream. With a traditional horizontal slicing of roles, we now need to rebuild that relationship between Dev and Ops into the same team, however that means for the time being we’re still going to have a bit of a split, something which we need to address in the coming months. Putting ‘DevOps’ teams together is not as simple as just chucking them together, it’s largely cultural based, with the right tooling to support them to work together.

Looking at where I would rank our organisation using DORA’s quick check, I’d suggest we’re at the following:

Which shows how much work we have to do/some of the challenges we’re facing. On the operational side we’re pretty strong in time to restore service, however we need to do a lot more in building that trust around deployments. The hope in the coming months is to pair up 1 x Dev and 1 x Ops together, in order to grow that capability around DevOps.

Next Week

Next week I’m helping put together some material on Lean-Agile Portfolio management for one of our clients, hoping to weave in some of the metrics we’re using currently, as well as that we’re looking at agreeing our next pilot team(s) and starting them on their Agile journey.